A couple weeks ago I was asked to look at system performance for a customer, and as part of my investigation I found a high read query. I had run a trace and filtered for anything over 10,000 reads and saw a query that required around 32,000 reads, and it was running at least once a second. I examined it further. It was a query against one table, for one presumably unique value in a column. I was suspicious. Based on the reads I guessed it was doing a table scan, even though I expected an index seek.

I ran the query in SSMS to see how many rows it returned. Three. I then ran the query with Include Actual Execution Plan enabled…it did an index seek. Well, the software runs the query differently than SSMS, so I grabbed the plan handle from the DMV and looked up the query plan that way. It was doing a full table scan against the table. I checked statistics…they had been updated with 100% sample that morning, and the value in the query existed in the histogram.

Then I checked the database options…Auto Update Statistics was disabled.

<sidebar>

Yes, I know that Kimberly Tripp recommends leaving Auto Update Statistics and Auto Create Statistics enabled…but she does say “It depends” in her post. So let me explain why we have traditionally recommended to customers that they disable the Auto Update Statistics option:

- We do not want statistics updating during production hours if at all possible; we want the customer to manage statistics through a regularly scheduled job.

- We want statistics updated with 100% sample because very often the distribution in critical tables is skewed. With Auto Update Statistics enabled, the statistics do not update with 100% sample unless the table is less than 8 MB in size.

I know, there are many discussions we can have about whether this is the “best” thing to recommend. I actually tried to go through the rationale, but it became too long, and will be another post entirely at some point. Just think of this from the point of the vendor (me) for a minute. We have to make configuration and maintenance recommendations for our customers. And we have to make them to fit thousands of customers. It is not an easy task to make general recommendations, because as you know, “It depends.”

</sidebar>

So why does it matter, in this case, that Auto Update Statistics is disabled?

Because when Auto Update Statistics is disabled, query plans will not recompile, even when statistics are updated.*

This was a shocker to me, and I have to thank Steve Smith ( b | t ) for pointing it out to me last fall during the SQLSaturday in Kalamazoo. He sent me a link on Execution Plan Caching and Reuse, and in the pretty yellow Note at the bottom it mentions this fact. Surprisingly, I haven’t found that same information in any of the other statistics-related documentation in BOL.

*To be clear, this is the relevant text, copied from the MSDN article:

When the AUTO_UPDATE_STATISTICS database option is SET to ON, queries are recompiled when they target tables or indexed views whose statistics have been updated or whose cardinalities have changed significantly since the last execution. This behavior applies to standard user-defined tables, temporary tables, and the inserted and deleted tables created by DML triggers. If query performance is affected by excessive recompilations, consider changing this setting to OFF. When the AUTO_UPDATE_STATISTICS database option is SET to OFF, no recompilations occur based on statistics or cardinality changes, with the exception of the inserted and deleted tables that are created by DML INSTEAD OF triggers.

Now, maybe everyone has Auto Update Statistics enabled and no one will ever have to worry about this problem, and this can become an inane fact that you know. But just in case, here’s a demo so you can see it in action. I commented the code along the way, and included screen shots where relevant.

USE master;

GO

/* create a new database for testing (optional) */

CREATE DATABASE [Stats]

ON PRIMARY

( NAME = N'Stats',

FILENAME = N'C:\Databases\SQL2008R2\Stats.mdf' ,

SIZE = 4096KB ,

FILEGROWTH = 1024KB )

LOG ON

( NAME = N'Stats_log',

FILENAME = N'C:\Databases\SQL2008R2\Stats_log.ldf' ,

SIZE = 1024KB ,

FILEGROWTH = 10% );

ALTER DATABASE [Stats] SET RECOVERY SIMPLE WITH NO_WAIT;

USE [Stats];

GO

/* turn off auto update statistics,

as it is enabled by default for new databases

*/

sp_dboption [Stats], 'auto update statistics', 'off';

GO

/* create a table and nonclustered index for testing */

CREATE TABLE dbo.mytable (

ID INT IDENTITY (1,1),

SomeValue INT);

CREATE NONCLUSTERED INDEX mytable_ID ON dbo.mytable (ID);

/* verify that no statistics exist for the index */

DBCC SHOW_STATISTICS ("dbo.mytable", mytable_ID) WITH STAT_HEADER

This is an abbreviated output from DBCC SHOW_STATISTICS, but you can see that there’s no statistics information available.

/* load some data */ SET NOCOUNT ON DECLARE @count INT = 1, @random INT = RAND()*100000; WHILE @count < 550 BEGIN INSERT INTO dbo.mytable (SomeValue) VALUES (@random); SET @count = @count + 1; SET @random = RAND()* 100000; END /* clear cache, just to make sure there are no query plans from previous testing hanging around */ DBCC FREEPROCCACHE /* run a selective query */ SELECT * FROM dbo.mytable WHERE ID = 549; /* get plan_handle */ SELECT p.usecounts, p.plan_handle, s.text FROM sys.dm_exec_cached_plans p OUTER APPLY sys.dm_exec_sql_text(p.plan_handle) s WHERE s.text LIKE '%dbo.mytable%'; /* use the plan_handle from the above query */ SELECT * FROM sys.dm_exec_query_plan(0x06000F0062117321B8A0FA07000000000000000000000000);

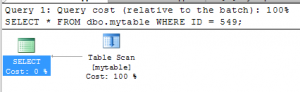

When we view the query plan, we see a full scan, which isn’t surprising since the table is so small.

/* load some more data */

SET NOCOUNT ON

DECLARE

@count INT = 1,

@random INT = RAND()*100000;

WHILE @count < 1000

BEGIN

INSERT INTO dbo.mytable (SomeValue) VALUES (@random);

SET @count = @count + 1;

SET @random = RAND()* 100000;

END

/*

run an update statement, which would would

normally invoke an update of statistics,

as 20% plus 500 rows are changing

*/

UPDATE dbo.mytable

SET SomeValue = 10000

WHERE ID IN (SELECT MAX(ID) FROM dbo.mytable);

/* verify that statistics were not updated */

DBCC SHOW_STATISTICS ("dbo.mytable", mytable_ID) WITH STAT_HEADER;

Statistics were not updated, because Auto Update is disabled.

Let’s update them manually and see what happens.

/* update statistics manually */

UPDATE STATISTICS dbo.mytable WITH FULLSCAN;

/* verify that statistics were updated */

DBCC SHOW_STATISTICS ("dbo.mytable", mytable_ID) WITH STAT_HEADER;

/* run the same selective query */ SELECT * FROM dbo.mytable WHERE ID = 549; /* check the plan_handle */ SELECT p.usecounts, p.plan_handle, s.text FROM sys.dm_exec_cached_plans p OUTER APPLY sys.dm_exec_sql_text(p.plan_handle) s WHERE s.text LIKE '%dbo.mytable%' /* the plan handle is the same, still a full scan */ SELECT * FROM sys.dm_exec_query_plan(0x06000F0062117321B8A0FA07000000000000000000000000);

Even though statistics were updated with 100% sample, because Auto Update Statistics was disabled, the query plans for dbo.mytable did not recompile, and they won’t until we either flush procedure cache, restart the instance, or enable Auto Update Statistics.

If you repeat the same steps, but this time with Auto Update Statistics enabled, you will see the query plan get removed from cache after statistics are updated (either automatically or manually).

/* clean up code */ USE master; GO DROP DATABASE [Stats];

Very good blog Erin. Perhaps you could touch on what DBA could do to address this issue in their databases??

Thanks Kevin! I definitely want to touch on what a DBA can do to address this – but I thought it was too much to put into this same post 🙂 Another one (SQL Sequel!) coming soon…

E

Sounds like a valid option would be to enable auto update stats right before you run the maintenance routine to update stats during non-prod hours. Once the maintenance completes, turn off the auto-update.

This way any query plans which depend on those stats will get recompiled, but you don’t suffer the hit of leaving auto-update running during the day.

Joe-

That’s an idea…you could also just clear procedure cache after updating stats, though I don’t know if that’s more dramatic then changing a database setting 🙂

Erin

Great post!!!

If you clear the objectplan cache, DBCC FREESYSTEMCACHE (‘Object Plans’), then you will have execution plans based on the

freshly updated statistics. This approach doesn’t impact on your logic behind disabling the auto-update.

You are of course going to get a CPU impact for all the recompiles and be susceptible to parameter sniffing.

Howver these issues would also be there if you had auto-update switched on.

This of course doesn’t even go into some of the other issues you may have such as..

>>The massive resources required for the 100% sample of all your tables

>>The risk of large updates during the day leading to the existing execution plans becoming obsolete.

I’ll have to test out DBCC FREESYSTEMCACHE, I usually use DBCC FREEPROCCACHE and am not familiar with the differences. Thanks for the suggestion – blog post on options is coming!

Hi Erin,

Meant to say that it was an excellent post. DBCC FREESYSTEMCACHE (‘Object Plans’) and DBCC FREEPROCCACHE are interchangeabled I believe.

Eoin

Great Post Erin!!

In our environment we don’t have any maintenance window so we don’t want to recompile all the SPs anytime. We just mark those sp’s for recompilation that are using tables we updated statistics for. I wrote a script couple of years ago which does following :

. Gets the table with highest rowcount changes from previous day.

. Updates statstics with fullscan.

. Find out SP’s using those tables by querying sql_modules.

. Mark those SPs for recompilation.

Thanks,

NJ

[…] recently blogged about an interesting behavior in SQL Server when the database option, Auto Update Statistics, is […]

[…] Statistics and Recompilations – A really good piece of writing and a not so well known gem of knowledge here from Erin Stellato (Blog|Twitter). […]

[…] http://erinstellato.com/2012/01/statistics-recompilations/ […]

[…] Erin Stellato (blog | twitter) first blogged about this here: Statistics and Recompilation. […]