Happy Friday everyone! Today’s post is the third and final for SQL University’s Internals week. Thank you to those of you that have been reading this week, it has been an honor to contribute to SQLU – thanks Jorge! ( blog | @sqlchicken )

For this last post I want to discuss the physical effects of deleting data from a table, and the inspiration for this post was a customer issue that I first encountered almost two years ago.

I was on site looking at system performance for one of our medium-sized customers. They had a 250 GB database at the time, and while the solution was performing pretty well overall, there was definitely room for improvement. I noticed a particular select statement that required over 27,000 reads, but only returned one row. I captured the execution plan for the query, and saw it was doing a full table scan. I was not surprised by this because the table is typically very small – only a few hundred rows at most. I did a count on the table and sure enough, there were only 66 rows in the table. How in the world do you get 25,000 reads against a table with 243 rows?

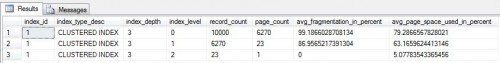

I used the sys.dm_db_index_physical_stats DMV to look at fragmentation, and there it was:

There were 27,325 pages in the table, and avg_page_space_used_in_percent was .07%. I would guess that for many of you, your first thought here is to rebuild the index. And you would be right, except this table was heap.

Deletes from a heap are managed differently than deletes from a table with a clustered index. When you delete a row from a heap, it changes the offset for that slot to 0. The data on the page is not actually removed (you can verify using DBCC PAGE). If all the rows on a page are deleted, the page still stays allocated to the heap. Even if you delete every row from the table, all of those pages remain allocated to the heap.

When you delete a row from a clustered index, the row is marked as a ghost record, and eventually gets cleaned up by the ghost-cleanup thread. If you delete all the rows from a page in a clustered index, the page is deallocated. The page could be re-used by the same clustered index in the future (assuming it’s in a dedicated extent), but if all pages in an extent were deallocated, then the extent would be deallocated from the clustered index, and could then be allocated to a different object later. If you delete every row from a clustered index, one page will still be allocated to it, even though it is empty.

Let’s take a look at this in action (scripts assume you are working in the Movies database, which we started in the last post). First we will create a table, then add 10,000 rows.

CREATE TABLE dbo.CurrentMoviesHeap

(MovieName CHAR (4000),

ReleaseDate SMALLDATETIME,

NumberTheaters INT,

EndDate SMALLDATETIME);

SET NOCOUNT ON

DECLARE @value INT = 1;

WHILE @value<10001

BEGIN

INSERT INTO dbo.CurrentMoviesHeap (MovieName, ReleaseDate, NumberTheaters, EndDate) VALUES

('Movie' + cast(@value as varchar(5)),GETDATE(), rand() * @value,GETDATE() + 30);

SET @value = @value + 1;

END

We can use sys.dm_db_index_physical_stats to look at the table information:

SELECT index_id, index_type_desc, index_depth, index_level, record_count, page_count, avg_fragmentation_in_percent, avg_page_space_used_in_percent FROM sys.dm_db_index_physical_stats(DB_ID(N'Movies'), OBJECT_ID(N'dbo.CurrentMoviesHeap'), NULL, NULL , 'DETAILED');

The pages are pretty full at this point, and there are 5000 pages allocated to the table. Now we will delete some rows and then run the physical_stats query again:

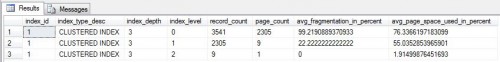

SET NOCOUNT ON DECLARE @value INT = 1; WHILE @value < 3001 BEGIN DELETE FROM dbo.CurrentMoviesHeap WHERE NumberTheaters BETWEEN (RAND()*@value) AND (RAND()*(@value + 10)); SET @value = @value + 1; END

We deleted well over half the table, and all of the pages are still assigned. Boo. Let’s delete more data:

SET NOCOUNT ON DECLARE @value INT = 5002; WHILE @value < 9001 BEGIN DELETE FROM dbo.CurrentMoviesHeap WHERE NumberTheaters BETWEEN (RAND()*@value) AND (RAND()*(@value + 10)); SET @value = @value + 100; END

Yuck. There are only 115 rows in the table, and still 5000 pages allocated. Let’s see how a table with a clustered index compares. We will again create a table and load data into it the same way.

CREATE TABLE dbo.CurrentMoviesClusteredIndex

(MovieName CHAR (4000),

ReleaseDate SMALLDATETIME,

NumberTheaters INT,

EndDate SMALLDATETIME)

CREATE CLUSTERED INDEX CIX_NumberTheaters ON dbo.CurrentMoviesClusteredIndex(NumberTheaters)

SET NOCOUNT ON

DECLARE @value INT = 1;

WHILE @value<10001

BEGIN

INSERT INTO dbo.CurrentMoviesClusteredIndex (MovieName, ReleaseDate, NumberTheaters, EndDate) VALUES

('Movie' + cast(@value as varchar(5)),GETDATE(), rand() * @value,GETDATE() + 30);

SET @value = @value + 1;

END

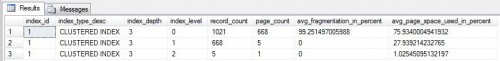

If we look at the output from sys.dm_db_index_physical_stats we see that the pages are not quite as filled as the heap (there’s over 1200 more pages).

I used the same script that I used for the heap to delete about 6500 rows, and if we check physical stats again, we see that the page count has dropped by 60%; the empty pages have been deallocated.

If we run the second script that deletes data and look at physical stats, the number of rows in the table is about 1000, and there are under 700 pages in the table. This is drastically different behavior than what we see with the heap.

- physical_stats output for clustered index after deleting more data

Something worth noting about the Optimizer… Even if I had a nonclustered index on the table, the Optimizer probably would have still chosen a table scan because there were so few rows in the table. The customer updated statistics weekly with 100% sample, and to the Optimizer, there were only 66 rows in the table. The table scan would seem more efficient because the Optimizer had no way of knowing that there were over 27,000 empty pages allocated to the table that it had to read. So what did we do for the customer? We did what you are probably hoping we did…we put a clustered index on the table.

I do realize that not everyone may be able to just add a clustered index to a table. In the event that you cannot add a clustered index, you have two options; neither of them are ideal.

If you are running SQL Server 2005 or earlier, you could put a clustered index on the table and then drop it. I can hear Paul Randal yelling “Noooooo!” now. Don’t believe me? Go read his post, “A SQL Server DBA myth a day: (29/30) fixing heap fragmentation.” Your other option, if you’re running SQL 2008 or higher, is to rebuild the table. Neither of these is a good option because you should probably have a clustered index on the table, and if you can add one, make sure you choose a good clustering key. The aforementioned options are just plain horrible because the nonclustered indexes have to be rebuilt (twice, if you add and then drop the clustered index). Paul does a good job of explaining this in his post, but the short explanation is that nonclustered indexes for a heap have the RID (rowID) in the leaf level to go look up the row in the heap. When you add a clustered index, you now have a clustering key, and the nonclustered indexes have to be rebuilt to put the clustering key in the leaf level. If you then go and drop the clustered index, those nonclustered indexes have to be rebuilt all over again to put the RID in the leaf level. That’s a lot of I/O my friends. If you are running SQL 2005, you have the option of disabling the nonclustered indexes before you add and drop the clustered index. With this method, you only have to rebuild the nonclustered indexes once. Check out my post on disabling indexes if you want to explore that option.

If you find any good row:page ratios in your heaps, let me know! After we bemoan the I/O effects all those empty pages, we can talk about a strategy for putting a clustered index on the heap. I hope you’ve enjoyed Internals week at SQL University, and good luck on your finals!

[…] Twitter ) has some excellent posts on this subject as well as Erin’s recent blog post on Internals and Deletes for SQL University which I highly recommend reading. Also worth mentioning is that Oracle has a default […]

[…] http://erinstellato.com/2011/05/sql-university-internals-deletes/ […]