For the past few months I have been following a customer issue that started way back in November of last year (2010). The customer is one of my personal favorites. Our application is business critical in their environment. If it isn’t available, employees have nothing to do. The database is large – nearly a terabyte – and interestingly enough they do not have a full time DBA. What they do have is an amazing team. One of the administrators for our application also serves as the DBA, and for not spending a ton of time learning SQL Server, he knows quite a bit about SQL Server. He is extremely smart. The rest of the team consists of another application administrator and individuals from the infrastructure team that have deep knowledge of their storage (SAN) and Virtual environment. The database server is virtualized, and they are running Windows 2008 R2 on the guest, and SQL Server 2008 R2.

The administrator contacted me in December because the database had gone down four or five times and the following errors appeared in the log file:

The log for database ‘CustomerDB’ is not available. Check the event log for related error messages. Resolve any errors and restart the database.

The operating system returned error 1117(The request could not be performed because of an I/O device error.) to SQL Server during a write at offset 0x00000003f60000 in file ‘M:MSSQLCustomer_Data.MDF’. Additional messages in the SQL Server error log and system event log may provide more detail. This is a severe system-level error condition that threatens database integrity and must be corrected immediately. Complete a full database consistency check (DBCC CHECKDB). This error can be caused by many factors; for more information see SQL Server Books Online.

Database CustomerDB was shutdown due to error 9001 in routine ‘XdesRMFull::Commit’. Restart for non-snapshot databases will be attempted after all connections to the database are aborted.

I had never seen those errors, and the first thing I asked was the date of their last successful backup, and the date of the last clean CHECKDB. My next thought, as I looked at the errors, was that it was an issue with the storage system, or the path between the database server and the storage. I asked the customer about that, and was informed that both the VM and storage teams had been looking into it and hadn’t found anything.

I did some searching online, which turned up very little, and then tweeted using the #sqlhelp hashtag (have I ever mentioned how much I love Twitter?). Jonathan Kehayias ( blog | @SQLPoolBoy ) immediately responded and asked for more details about their configuration, and he also asked me to send along the error log, which I did after I scrubbed all customer information. He came back and asked if he could forward it to Amit Banerjee ( blog | @banerjeeamit ), to have him take a look as well.

I explained my line of thinking to Jonathan, and he commented that he wouldn’t be surprised if there was a bad fiber optic cable, dust in the connection, etc.

Amit pointed out the 1117 error, and that it appeared multiple times in the Errorlog. He stated that there should be other NTFS or other disk-related errors in the Windows Event Logs, and believed the errors were a sign of a storage subsystem issue. He recommended a complete CHECKDB of all databases on drive M.

I took Jonathan’s and Amit’s input and relayed it back to the customer, and explained that I had been in contact with a SQL Server MVP and a member of Microsoft’s SQL Server Escalation Team, respectively. I also recommended officially engaging Microsoft, because this was an unusual error, and also had large impact for them. They are a 24/7 solution and random shutdowns of the database are not acceptable. They agreed and contacted Microsoft. They also engaged their SAN vendor and VMWare. These guys don’t mess around, which I love. No one tried to blame anyone, no tried to push responsibility to another team, they all jumped in and asked, “Where do we start?”

As an interesting side note, there were no errors in the Windows Event Log that could be correlated to the aforementioned 1117 error.

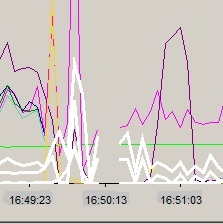

I had contact with their admin over the next few weeks, checking for updates. The issue bounced around a bit. The first Microsoft rep suspected corruption in the log file, but that did not pan out, and it went from the SQL Server team over to the Windows team for a while, and then back to the SQL Server team. Eventually the SQL Server team had them run PSSDIAG and SQLIOSIM, and one thing they noticed was gaps in the PerfMon data:

The issue escalated in February when the problem occurred three times within an hour. The customer had captured PSSDIAG data during several instances of the problem, and then new errors started to show up in the logs:

The log for database ‘CustomerDB’ is not available. Check the event log for related error messages. Resolve any errors and restart the database.

Error: 9001 Severity: 21 State: 4.

Write error during log flush.

LogWriter: Operating system error 1117(The request could not be performed because of an I/O device error.) encountered.

Error: 17053 Severity: 16 State: 1.

The background checkpoint thread has encountered an unrecoverable error. The checkpoint process is terminating so that the thread can clean up its resources. This is an informational message only. No user action is required.

Error: 18400 Severity: 16 State: 1.

The operating system returned error 1117(The request could not be performed because of an I/O device error.) to SQL Server during a write at offset 0x000000752c8000 in file ‘R:MSSQLCustomer_file.ndf’. Additional messages in the SQL Server error log and system event log may provide more detail. This is a severe system-level error condition that threatens database integrity and must be corrected immediately. Complete a full database consistency check (DBCC CHECKDB). This error can be caused by many factors; for more information see SQL Server Books Online.

Error: 823 Severity: 24 State: 3.

When they shared these errors with me, I nearly fainted. THERE WAS AN 823 ERROR IN THERE! I was certain there was corruption in the database.

Microsoft was concerned about this as well, the customer ran CHECKDB and everything was ok. Whew. Soon after Microsoft had them apply SP1 for Windows 2008 R2, and also follow the steps in this article to improve the logging capabilities of the Storport.sys driver to hopefully capture more information about the issue.

Finally, in mid-February I got an email asking if I could participate in a conference call with Microsoft, VMWare and the customer. During the call, I didn’t have much to contribute as the problem was really just manifesting in our application, but it ended up being the call where they resolved the issue.

VMWare reported they had just solved a somewhat similar issue for another customer, where they had the customer change the SCSI controller from PVSCSI (Para Virtualized SCSI) controller to LSI on the VMWare host. Our customer’s environment had been configured to use PVSCSI adapters for the entire time we had been working with them (including when they were on Windows 2003). The PVSCSI adapters are high-performance storage adapters that provide high throughput with lower CPU utilization. VMWare recommended changing to LSI adapter (and noted that performance should not degrade). The customer made the change, and just like that, the problem was resolved.

Microsoft recently published a KB article which describes this issue: SQL Server reports Operating system error 1117 (I/O Device Error) on VMWare ESX environments configured to use PVSCSI adapters.

Today, things at the customer site are running well, and they’re back to tweaking their system, managing the maintenance plans and keeping an eye on performance. I give credit to all the groups involved in this one. Never once did any group point the finger at the other, and it was great example of teamwork and persistence in getting the issue resolved. While I had no involvement in the resolution, it is my hope that their work can prevent (or at least quickly diagnose) this issue for other customers.

To Jonathan and Amit, thank you for taking the time to look at the logs and offer ideas. To the team at this customer site that might read this post: Thanks for always keeping us in the loop, and for agreeing to let me post this. Hope to see you soon!

What a great story! This is so true about how some really troubling issues can take a long time to resolve because the symptom can point to so many different things. It’s really a great team that can go through that and work together the whole time– that’s a success to celebrate.

Kendra-

You make a good point, I don’t talk about how long it takes to solve the problem, but it really did take a while. And everyone persevered until they solved the problem. Excellent point, worth another post at some point 🙂

Thanks for the comment, and congrats again on the new gig!!

E

We have the same issue. We’are going to try to change the SCSI from Paravirtual to LSI, althought we are really worried about performance. Great post and really well explained.

Javier-

I am glad you found the post useful. I hope the change helps the issue and doesn’t affect performance (it did not in this customer’s case). Let me know!

Erin

Hi, we having the same error on a tiny 30MB database. However ours is not a virtual environment but physical with fail-over clustering for SQL Server 2008 R2. The instance herewith also host a couple of other databases, with the error only encountered on just this database.

Our temporary way around is taking the database offline then back online, and all is well there after but i am looking for a permanent fix.